
NASA Funds Segal Rozenhaimer’s Machine Learning Research on Coral Reef Remote Sensing Imagery

NASA has agreed to fund a team of scientists (Dr. Ved Chirayath, PI; Dr. Alan Li, Co-I; Dr. Kamalika Das, Co-I), including Dr. Michal Segal Rozenhaimer of BAER as Co-Investigator, to study aquatic ecosystems, particularly coral reefs, using recently developed remote sensing technology to be deployed on satellites and aircraft. Using these instruments, the scientists will assess coral reef ecosystem vitality and biodiversity at unprecedented spatial and temporal scales.

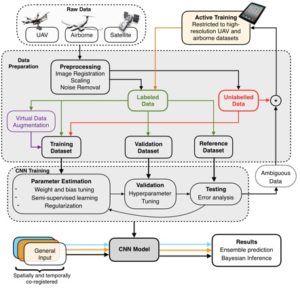

The project, called NeMO-Net (The Neural Multi-Modal Observation & Training Network for Global Coral Reef Assessment), aims to combine data collected by NASA’s FluidCam instrument, the Coral Reef Airborne Laboratory (CORAL), and the satellite-based Moderate Resolution Imaging Spectroradiometer (MODIS) to characterize fundamental indicators of coral reef health. FluidCam, developed by Dr. Ved Chirayath (NeMO-Net PI), provides centimeter-scale, distortion-free, 3D underwater images remotely sensed from aircraft. CORAL uses the Portable Remote Imaging Spectrometer (PRISM) aboard a Gulfstream-IV (G-IV) aircraft to produce lower-resolution images, while MODIS, flying aboard NASA’s Terra and Aqua satellites, views every point on Earth every 1-2 days in 36 discrete spectral bands. NeMO-Net will build on current data fusion methodology and develop an open-source convolutional neural network (CNN) to predict percent living cover, map spatial distribution, and segment coral morphology types from the fused remote sensing data.
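To make the fusion and "percent living cover" ideas concrete, here is a minimal sketch in Python with NumPy. It assumes the coarse layer (for example, PRISM or MODIS bands) is already co-registered to the fine FluidCam grid and differs only by an integer scale factor, and it uses simple nearest-neighbour resampling; real pipelines involve georeferenced reprojection. The function names and class IDs are hypothetical and are not taken from NeMO-Net.

```python
import numpy as np


def upsample_nearest(image: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of an (H, W, C) array by an integer factor."""
    return np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)


def fuse_layers(fine: np.ndarray, coarse: np.ndarray, factor: int) -> np.ndarray:
    """Stack a fine image (H, W, C1) with a coarse image (H/f, W/f, C2)
    resampled onto the fine grid, returning an (H, W, C1 + C2) array.

    Hypothetical helper: assumes the two grids are already co-registered.
    """
    coarse_up = upsample_nearest(coarse, factor)
    if coarse_up.shape[:2] != fine.shape[:2]:
        raise ValueError("grids are not co-registered at this scale factor")
    return np.concatenate([fine, coarse_up], axis=-1)


def percent_living_cover(class_map: np.ndarray, living_class: int) -> float:
    """Share of pixels in a per-pixel class map labeled as living coral, in %."""
    return float(100.0 * np.mean(class_map == living_class))


# Usage: a 4x4 three-band "fine" image fused with a 2x2 two-band "coarse" image.
fine = np.zeros((4, 4, 3))
coarse = np.ones((2, 2, 2))
fused = fuse_layers(fine, coarse, factor=2)
print(fused.shape)  # (4, 4, 5)
print(percent_living_cover(np.array([[0, 1], [1, 1]]), living_class=1))  # 75.0
```

Stacking resampled bands as extra input channels is only one simple way to feed multi-source data to a CNN; the article does not say which fusion strategy NeMO-Net adopts.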

Dr. Michal Segal Rozenhaimer earned a Ph.D. in Civil and Environmental Engineering from the Technion (Israel Institute of Technology) in 2011. Her research interests include hyperspectral remote sensing; the application of statistical and machine learning methods in atmospheric science; and atmospheric composition, including air quality and long-range transport.

In the NeMO-Net project, UAV, airborne, and satellite data are preprocessed and then split into labeled and unlabeled categories. Training, validation, and reference sets are created from these data and fed into the CNN training process. Ambiguous data are fed back into an active learning stage. The final CNN model takes spatially and temporally co-registered datasets, when available, and outputs predictions using ensemble or Bayesian inference techniques.
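The workflow above can be sketched generically in Python with NumPy. The article does not say how NeMO-Net marks unlabeled samples, measures ambiguity, or combines ensemble members. This sketch therefore makes several assumptions: unlabeled samples carry the hypothetical label `-1`, the ensemble output is the average of per-model class probabilities, and "ambiguous" samples are those with the highest predictive entropy, a common uncertainty-sampling heuristic for active learning. Bayesian inference is not shown, and all function names are illustrative.

```python
import numpy as np

UNLABELED = -1  # hypothetical marker for samples without a label


def split_labeled(X: np.ndarray, y: np.ndarray):
    """Separate labeled samples from unlabeled ones (y == UNLABELED)."""
    mask = y != UNLABELED
    return X[mask], y[mask], X[~mask]


def train_val_split(X: np.ndarray, y: np.ndarray, val_fraction=0.2, seed=0):
    """Randomly split labeled data into training and validation sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_val = int(round(len(X) * val_fraction))
    val, train = idx[:n_val], idx[n_val:]
    return X[train], y[train], X[val], y[val]


def ensemble_predict(prob_list) -> np.ndarray:
    """Average class probabilities from M models: (M, N, K) -> (N, K)."""
    return np.mean(np.stack(prob_list), axis=0)


def predictive_entropy(probs: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Entropy of each sample's class distribution; higher = more ambiguous."""
    return -np.sum(probs * np.log(probs + eps), axis=-1)


def select_ambiguous(probs: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k most ambiguous samples, most ambiguous first."""
    return np.argsort(predictive_entropy(probs))[::-1][:k]


# Usage: two hypothetical models scoring three samples over two classes.
p1 = np.array([[0.90, 0.10], [0.5, 0.5], [0.7, 0.3]])
p2 = np.array([[0.95, 0.05], [0.5, 0.5], [0.5, 0.5]])
avg = ensemble_predict([p1, p2])
print(select_ambiguous(avg, k=2))  # [1 2]: candidates to route back for labeling
```

Entropy-based selection is only one of several active learning criteria (disagreement between ensemble members is another), so treat this as an illustration of the general idea rather than the project's method.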