
Coral Reef Conservation through Fluid Lensing, Machine Learning, and Becoming a Sea Turtle

By Rachel Sender

A fire coral before and after bleaching. Bleaching has become more prevalent in coral reefs as global warming heats the oceans. Image: XL Catlin Seaview Survey.

A Daring Drone Delivery

In 2019, over the waters off the coast of Guam, a team of NASA and Bay Area Environmental Research Institute (BAERI) researchers was flying a large, black, insect-like DJI M600 drone with six long propellers as part of a project called NeMO-Net. The mission, a joint effort with the University of Guam and the Guam government through Dr. Romina King, aimed to map and image Guam’s coral reefs. NeMO-Net, which stands for Neural Multimodal Observation and Training Network for global coral reef assessment, is a neural network that learns, with the help of video gamers, to identify the species of coral that make up the world’s reefs.

Jarrett van den Bergh, a software engineer and member of the team, had stayed behind in California for this mission but received an urgent text at 6:00 a.m. Pacific time on that fateful day: two of the drone’s redundant batteries had failed, necessitating an “emergency water landing”—in other words, a controlled crash into the water. A new drone was needed in Guam by the next day. He made some calls and rushed to NASA’s Ames Research Center in Moffett Field, California, to see what he could do.

A DJI M600 drone flying over Puerto Rico. Image: NASA.

While one failed mission may seem trivial in the grand scheme of things, van den Bergh knew the importance of what he and the team were setting out to do—map and classify all of the world’s coral reefs. After all, it is impossible to understand the full effects of climate change on the diversity of life on Earth without knowing the extent of that diversity as it currently stands.

The team had an ambitious goal ahead of them, and to achieve it they would use machine learning to combine NASA’s supercomputing power with an innovative imaging technology called fluid lensing. Fluid lensing uses water itself as a magnifying lens, looking down through ocean waves to see deeper. Imaging and classifying coral reefs meant collecting and analyzing large amounts of high-quality data, and the sheer size and depth of the oceans called for enormous brain power.

The NeMO-Net game launched publicly at the onset of the COVID-19 pandemic, and Ved Chirayath, the project’s principal investigator and an enthusiastic scientist, recalls people questioning why resources should go to exploring coral reefs at such a tumultuous time for humanity. With urgency and directness, Chirayath says, “It was a fair point, and I said, ‘You know, I guarantee you that the next drug to treat COVID will probably come out of a coral reef.’” That prediction came true about six months after the launch. “A drug that [is] 30 times more effective than remdesivir [an antiviral drug originally developed to treat hepatitis C and later used to treat COVID-19] came out of one of the reefs that had been surveyed using our technologies.”

Back in California, van den Bergh was doing everything he could to ensure the Guam mission would be a success. “My car was broken, so I was Ubering everywhere, and I couldn’t contact my team,” he recalls. The time difference between van den Bergh and the team in Guam was 17 hours, so at this point in his day they were asleep, and he was making decisions on the fly. Thankfully, after many calls, he found a drone owned by a different research group. He ran across the Ames campus to a large hangar he had never visited. A sign outside warned: TRESPASSERS WILL BE PROSECUTED. Wondering if he had the right building, van den Bergh entered and was greeted by a man who said, “Yeah, I have that drone.”

After offering heartfelt assurances that nothing bad would happen to this one, van den Bergh used a forklift to lift the big drone, about the size of a large moving box, off its storage shelf. He then called an UberXL and headed to the airport: the only way he could think of to get the new drone to Guam in time was to bring it as (oversized) checked luggage on a flight.

To the surprise and relief of the team in Guam, van den Bergh appeared at their site the next morning, new drone in tow. Chirayath recalls excitedly welcoming van den Bergh before noticing that all he had with him was the drone. “Jarrett, where’s your suitcase?” Chirayath laughs, “It was quite comical; we had to go get him a bunch of things.”

The Dire Importance of Reefs

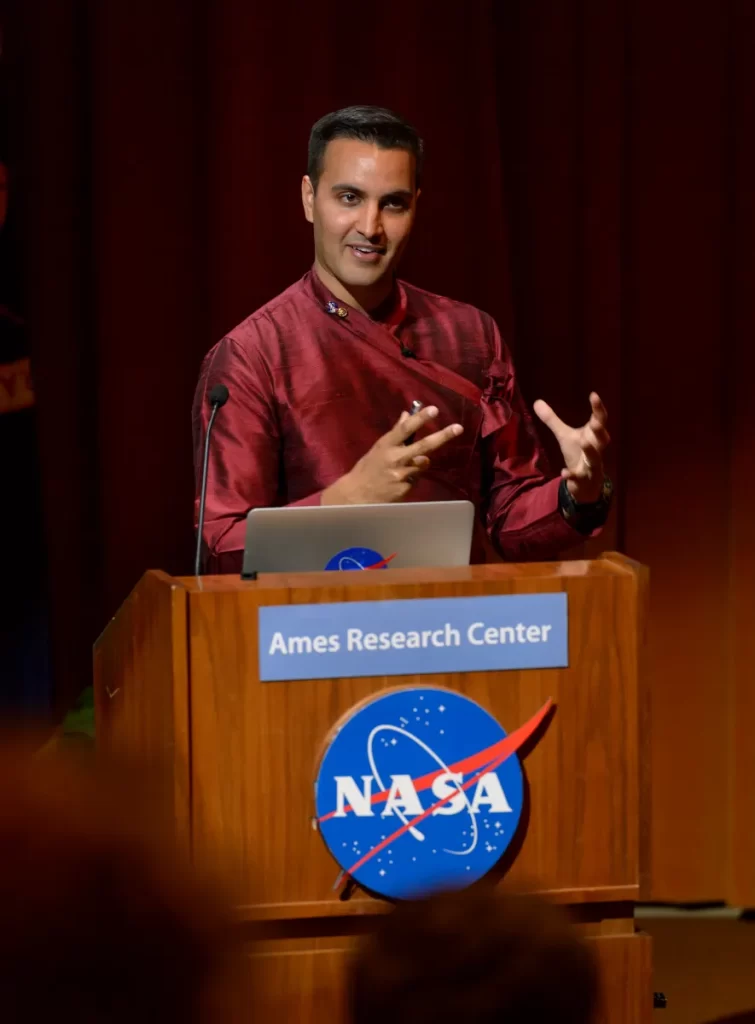

When I interviewed Chirayath, he was in his office. He wore a fitted black sweater and had slicked-back hair and a subtle sparkle from a diamond stud earring. Chirayath told me how he began his career as a theoretical astrophysicist interested in the search for life in space.

Ved Chirayath. Image: NASA.

He was brought to NASA to work on software he had created called atmospheric lensing, which, as the name suggests, uses the atmosphere as a lens to look up into space more clearly. Around this time, he met oceanographer Dr. Sylvia Earle, who brought to his attention the lack of visual data for the ocean: over 70% of Earth’s surface is covered by water, but as of 2017, when this conversation occurred, less than 5% of that area had been mapped at a resolution of 1 m or finer. For perspective, the entire surfaces of Mars and the Moon have been mapped (though only at a resolution of 30 m). Traditionally, coral reefs are mapped using passive remote sensing and lidar, but the process is slow and the resulting images are low resolution, because ocean waves distort the sensing signals. This gap in information inspired Chirayath to turn his sights back to Earth. “Mars and the Moon are interesting, but they don’t have a direct impact on our survival as a species.” Chirayath went on to explain that changes in the ocean are known to have contributed to mass extinctions in Earth’s history. Coral reefs support over 25% of all ocean life, so mapping and classifying them is vital.

He reflected on this real threat to his home planet and decided to reorient his research path. “I was like, OK, I need to double down and try to solve this [mapping] problem.” So, using the work he had done on atmospheric lensing, Chirayath set out to develop a similar technology for looking through water.

Fluid Lensing: Harnessing the Visual Power of Water

A huge hurdle in mapping coral reefs has been visibility. Light travels differently through water than through air, bending and not reaching as far. We can all relate to the difficulty of seeing through water. When we stand in a swimming pool, for example, our bottom half appears inches removed from our top, distorted by the way water bends light. Or, looking down through deep water past the limits of light’s shortened reach, we wonder what lies in the dark unknown beneath our treading feet.

With fluid lensing, Chirayath was able to leverage the unique effects of ocean waves to capture images of the ocean floor that are clearer than they would be if there were no water at all.

The arc of a wave is a strong magnifier. The moment it passes over an area, that area becomes visible from above at high resolution, similar to how it appears through a microscope. Additionally, any sunlight that passes through the wave’s arc is focused, becoming hundreds of times more powerful and reaching and illuminating much deeper. We see this phenomenon when bands of light move across pool floors.

Sunlight on the floor of a pool. Image: https://www.pexels.com/photo/a-boy-swimming-underwater-9044075/

For NeMO-Net, drones, each equipped with a high-powered digital camera, capture 3D remote sensing images of the ocean from above. Fluid lensing is then able to find moments of heightened visibility and piece them together to create a complete 1 m–resolution picture of the ocean floor as if the water weren’t there.
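To make the idea of finding moments of heightened visibility and piecing them together concrete, here is a minimal Python sketch of one simple way such compositing could work. It is not NASA’s fluid lensing algorithm, which also has to model and correct the distortion the waves introduce. It assumes a hypothetical stack of already-aligned grayscale frames of the same patch of seafloor, splits the scene into square tiles, and for each tile keeps the frame showing the most local contrast (a lucky-imaging-style selection rule). The names `tile_sharpness` and `composite_sharpest` are illustrative only.

```python
# Hypothetical sketch of lucky-imaging-style compositing; NOT NASA's fluid
# lensing algorithm. Assumes the frames are already aligned to one another.
from typing import List

Image = List[List[float]]  # grayscale frame: rows of pixel intensities


def tile_sharpness(img: Image, r0: int, c0: int, size: int) -> float:
    """Score one tile by summing squared differences between neighboring pixels.

    Blurry or washed-out tiles have small differences; crisp detail scores high.
    """
    r_end = min(r0 + size, len(img))
    c_end = min(c0 + size, len(img[0]))
    total = 0.0
    for r in range(r0, r_end):
        for c in range(c0, c_end):
            if c + 1 < c_end:  # right-hand neighbor inside the tile
                total += (img[r][c + 1] - img[r][c]) ** 2
            if r + 1 < r_end:  # lower neighbor inside the tile
                total += (img[r + 1][c] - img[r][c]) ** 2
    return total


def composite_sharpest(frames: List[Image], size: int) -> Image:
    """Build one image by copying each tile from the frame where it looks sharpest."""
    rows, cols = len(frames[0]), len(frames[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, size):
        for c0 in range(0, cols, size):
            # Pick the frame whose version of this tile has the most detail
            # (ties go to the earliest frame in the list).
            best = max(frames, key=lambda f: tile_sharpness(f, r0, c0, size))
            for r in range(r0, min(r0 + size, rows)):
                for c in range(c0, min(c0 + size, cols)):
                    out[r][c] = best[r][c]
    return out
```

For example, if one frame is crisp on its left half and washed out on its right, and a second frame is the reverse, the composite takes each half from whichever frame shows it clearly. Summed squared neighbor differences are a deliberately crude sharpness score chosen for brevity; a production system would likely need something more robust to noise and glare.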

The fluid lensing hardware and software are also able to remove visual distortions unrelated to the ocean, like cloud coverage. The machine learning behind this important capability was contributed by Dr. Michal Segal-Rozenheimer, a senior research scientist at BAERI/NASA Ames.

Making the Data Useful

Solving the issue of ocean-floor visibility was only one hurdle of many for mapping coral reefs. Next, Chirayath and his team needed to figure out how to process, i.e., classify, the large amounts of data they were acquiring. Coral reefs are exceptionally complex, so seeing them isn’t enough to understand what’s there. The biodiversity needs to be meticulously identified as well, but that feat would take thousands of humans many years to accomplish.

“You go to the Amazon, and in one square meter you might have one species or 10 species, but in a coral reef … you might be looking at 1000 species in [the same] little area,” says Chirayath. Luckily, NASA has one of the most powerful supercomputers in the world, called Pleiades, designed to process vast amounts of information at speeds up to 7,000 times faster than an average home computer. Chirayath and his team decided to build a neural network using Pleiades that could learn to identify all of the thousands of species found in coral reefs.

A neural network is a form of artificial intelligence inspired by the human brain. A team co-led by Chirayath and Dr. Alan Li, who was a research engineer at NASA Ames during NeMO-Net’s development, built algorithms that would allow NeMO-Net to teach itself coral reef identification.

Neural networks can’t learn from nothing, however. Large amounts of accurate information must be fed into the network for it to process and master. One great way to gather lots of data is to involve lots of people, which is how Chirayath came up with the idea to make NeMO-Net a community science game.

Accessing the Hive Mind

Enter the web- and game-design expertise of Jarrett van den Bergh. Van den Bergh, who balances Chirayath’s seriousness with a carefree tone and huge puppy smile, joined the NeMO-Net team in 2016, soon after its inception, to develop demos for training the neural network. He describes the process of building the game, which took extensive trial and error: “We spent a lot of time figuring out the right balance between a tool scientists could use versus something that was entertaining.”

Jarrett van den Bergh. Image: Ved Chirayath.

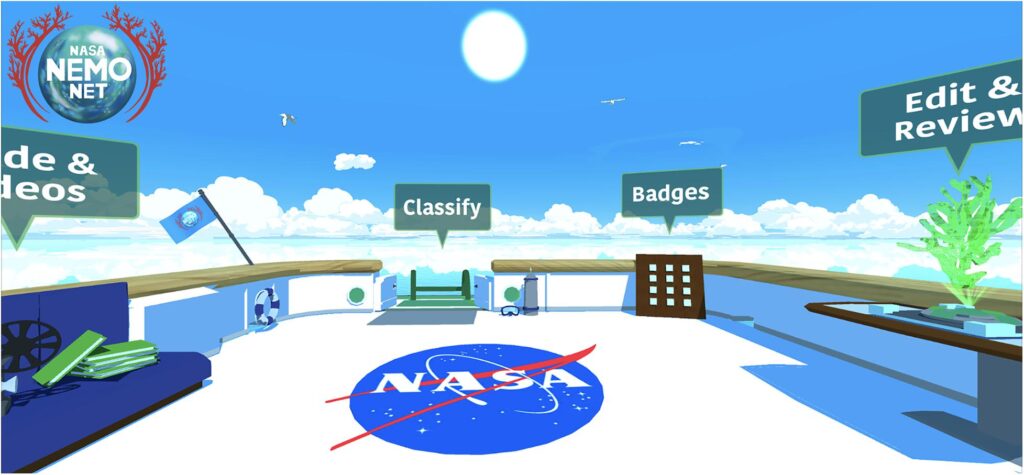

When the game is opened, the screen of your smartphone or tablet becomes an animated boat deck emblazoned with the NASA logo. The boat is surrounded by an ocean reflecting huge, fluffy clouds passing overhead. The sound of serene waves and peaceful music carries you through a tutorial that explains the features of the game before you dive into training.

Sunny day on the deck of the Nautilus, the virtual research vessel in the NeMO-Net game. Image: NeMO-Net – Gamifying 3D Labeling of Multi-Modal Reference Datasets to Support Automated Marine Habitat Mapping: figure 1, by van den Bergh et al., licensed under CC-BY 4.0.

The initial gameplay includes training in how to identify objects in the game. This consists of coloring in grayed-out images of coral reefs, which were acquired from the fluid lensing drone images taken by the NeMO-Net team on their expeditions. Each color represents a different classification, whether a type of coral, rock, or man-made object. At first, only two colors are available, as you learn the most basic levels of classification.

NeMO-Net being played on a tablet. Image: Ved Chirayath.

When the game determines that you have mastered the first level, you earn your first badge, the clownfish, and are able to move on. As you progress through the game, earning more badges, more classifications are presented to you. Perhaps because of my small iPhone screen (the game was originally developed for the larger screen of a tablet), I have found moving past “clownfish” challenging, but I look forward to the progression of the game and learning more species of coral as I continue.

Once training is complete, you are able to swim through the coral reefs, seeing the ocean around you. As you earn badges, the animals they’re named for populate the ocean, enabling you to swim among 3D schools of fish, sharks, and whales. Information about all of the species you see is also easily accessible during the game by tapping a sidebar and selecting the organism you’re curious about.

During our interview, van den Bergh was excited to learn the username that the game’s neural network had assigned me: “Admiral Torpedo Shellfish.” He laughed with me at this subtle detail, which helps a game with such an important, even intimidating, mission stay fun and a little whimsical.

According to Chirayath, NeMO-Net has a core following of 80,000 players, including middle-school prodigy Kellen Homan. In 2020, Homan became one of the best players in the world, out-classifying PhDs and reaching the coveted title of “sea turtle,” one of the highest ranks in the game. Young players are common because of the educational nature of the game. Van den Bergh recalls one elementary school classroom he visited while doing outreach where, as he left, he heard students saying they wanted to go back to “Nemo-Land.”

Players are often local to the regions where the game’s coral reefs are imaged, like Guam and Puerto Rico. They want to learn about their local reefs and play a part in their protection.

Looking Forward

Chirayath doesn’t see an end to this project. It is unknown how much of the 360,000,000 sq km ocean floor is covered by coral reefs, but Chirayath estimates about 300,000 sq km. The NeMO-Net team hopes to have 65,000 sq km of reefs, about 22% of the estimated total, mapped by this fall. Even after everything has been imaged, the work will not end: the team will continue rescanning all of the reefs so that changes in their ecosystems and biodiversity can be tracked. All the while, NeMO-Net is learning better classification skills from the game’s players, loyal and new, and chipping away at identifying the millions of lifeforms formerly hidden from view by the vast ocean waters.