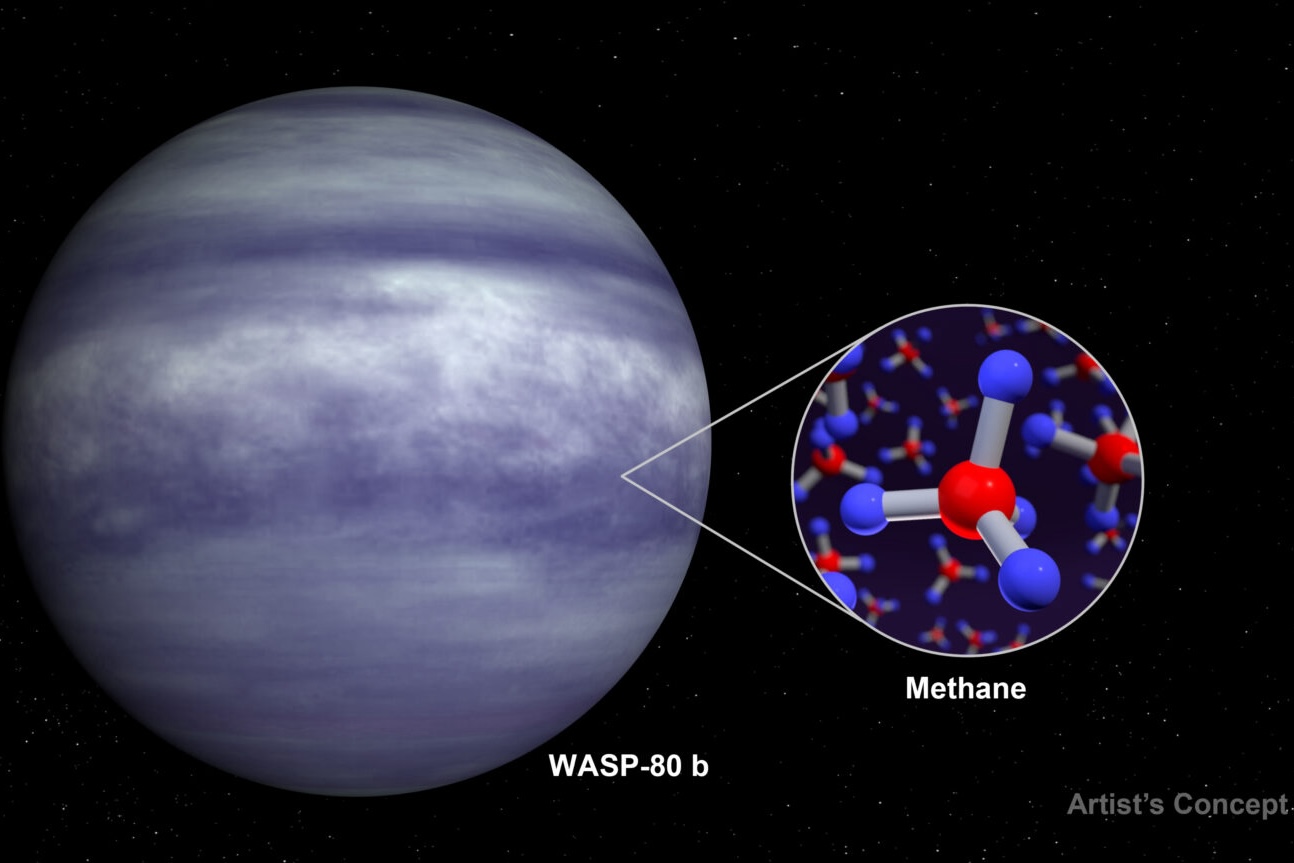

BAERI’s Taylor Bell and a team of researchers have identified the presence of methane in the atmosphere…

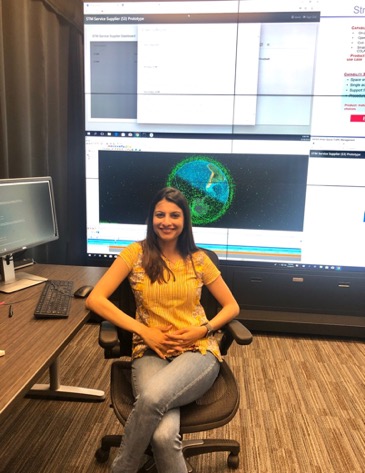

In Conversation, Women at BAERI: Dr. Sreeja Nag

Sreeja Nag is a Senior Research Scientist at the Bay Area Environmental Research Institute, NASA Ames Research Center. Her research focuses on how clusters of spacecraft may autonomously ‘talk’ and move while in orbit to make more accurate observations and better science decisions.

Tell me a little bit about your research.

My research involves designing distributed spacecraft and their autonomous operations. One theme is distributed spacecraft autonomy, which looks at how multiple spacecraft in orbit can talk to each other and make reactive science decisions. If a spacecraft sees something of interest on the ground, it can make inferences and predictions based on its observations. It can then broadcast that knowledge to other spacecraft as actionable metadata, so they can change their observation control strategies accordingly. Dynamic control based on inter-spacecraft coordination can get the most out of existing space assets, because the spacecraft can adaptively reconfigure their instrument orientations, channels, data collection rates, integration times, and so on.
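As a rough illustration of the “actionable metadata” idea, the Python sketch below shows one spacecraft’s alert being applied to a peer’s instrument plan. The message fields, the channel names, the 0.7 confidence threshold, and the rule of doubling the sample rate are all hypothetical choices made for this example, not the design of any actual mission.

```python
from dataclasses import dataclass, replace

# Hypothetical mapping from an event type to the instrument channels worth using.
CHANNELS_FOR_EVENT = {
    "wildfire": ("thermal_ir", "swir"),
    "flood": ("visible", "near_ir"),
}


@dataclass(frozen=True)
class Alert:
    """Hypothetical 'actionable metadata' broadcast by the spacecraft that saw the event."""
    sender: str
    lat: float
    lon: float
    event_type: str
    confidence: float  # sender's own estimate, 0..1


@dataclass(frozen=True)
class InstrumentPlan:
    """Hypothetical settings a receiving spacecraft can reconfigure."""
    target: tuple[float, float]   # (lat, lon) the instrument points at
    channels: tuple[str, ...]
    sample_rate_hz: float
    integration_time_s: float


def apply_alert(plan: InstrumentPlan, alert: Alert, threshold: float = 0.7) -> InstrumentPlan:
    """Retarget toward a peer's event if the alert is confident enough; otherwise keep the plan."""
    if alert.confidence < threshold:
        return plan
    return replace(
        plan,
        target=(alert.lat, alert.lon),
        # Unknown event types keep the current channels.
        channels=CHANNELS_FOR_EVENT.get(alert.event_type, plan.channels),
        sample_rate_hz=plan.sample_rate_hz * 2,          # sample faster over the event
        integration_time_s=plan.integration_time_s / 2,  # shorter exposures to match
    )
```

Returning a new frozen plan rather than mutating the old one keeps the previous configuration available if the alert later turns out to be a false positive.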

Then there’s another theme that focuses on distributed operator autonomy. I used to co-lead the communication and navigation group under the UAV (unmanned aerial vehicle) traffic management project. That project will inform how the government manages the thousands of drones that will soon be flying in our skies. The UAV project gave me new ideas for building an automated framework in which different entities controlling vehicles can interact with one another more efficiently and share the skies safely. We are now applying the same concept to space traffic management, to automate interactions between (currently disjointed) spacecraft operators and providers of services such as space situational awareness, conjunction assessment, and space weather forecasts.

Essentially, one half of my life is creating technology by which we can fly sensor webs of multiple satellites, and the other half is creating technology so these satellites don’t collide or radio-interfere!

If they are autonomously designed, is it similar to robotic swarms?

Distributed spacecraft are multiple, free-flying satellites. The way they are arranged structurally in space determines whether they form a constellation, a formation, or a swarm. If they are in defined, globally distributed orbits, it’s called a constellation, like GPS or Iridium. If they are only loosely connected to each other orbitally and structurally, it’s a swarm. Many cubesats nowadays are deployed as a swarm from the ISS or as secondary payloads, but they can spread out evenly using differential drag control. If they keep precise intersatellite distances or angles, it’s a formation, like the PRISMA mission. So, structural dependency is one of the aspects that determines the name.

What I look at is this: irrespective of how they are arranged, when should they communicate with each other or with ground control? What should that communication contain, and how can they reactively control their instruments to maximize the geospatial data they collect together? We know some windows of opportunity, and some power and thermal constraints, ahead of time from the satellite orbits and bus specifications. Using those as inputs, we design algorithms that schedule when spacecraft should be talking to each other versus making observations, how their attitude should change over time, and how they should process their observations to decide what to do next. It’s a lot like self-driving, interconnected cars, but with the very tight physics and resource constraints of space.
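The trade-off between talking and observing can be sketched as a simple greedy scheduler. This is a minimal sketch, assuming per-time-step visibility, contact, and sunlight flags that are known ahead of time from the orbits, plus a single battery budget; the costs and the rule “observe first, share when a link opens” are illustrative assumptions, not the algorithms described in the interview.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    """One time step; these hypothetical flags would come from orbit propagation."""
    target_visible: bool   # a ground target is within the field of regard
    link_available: bool   # a peer spacecraft or ground station is in contact
    in_sunlight: bool      # solar panels can recharge the battery


def greedy_schedule(slots, battery=10.0, capacity=10.0,
                    obs_cost=3.0, comm_cost=2.0, charge=2.0):
    """Assign 'observe', 'talk', or 'idle' to each slot under a simple power budget."""
    plan, pending = [], 0  # pending = observations not yet shared with anyone
    for s in slots:
        if s.in_sunlight:
            battery = min(capacity, battery + charge)
        if s.target_visible and battery >= obs_cost:
            action, battery, pending = "observe", battery - obs_cost, pending + 1
        elif s.link_available and pending and battery >= comm_cost:
            action, battery, pending = "talk", battery - comm_cost, 0
        else:
            action = "idle"
        plan.append(action)
    return plan
```

A greedy rule only looks at the current step; a planner that optimizes over the whole horizon (for example with integer or dynamic programming) would typically make better use of the same windows.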

Is it based on distance? How do they decide?

The Earth is so big and so dynamic, and it rotates out of sync with satellite orbits, so there are a lot of factors! In a NASA-funded science constellation, you can only afford to fly maybe a few dozen spacecraft, and that’s for small spacecraft, by the way. Even with a larger number, say 24, they are thousands of kilometers away from each other. In a single orbital plane of 8 spacecraft at 700 km altitude, the closest pair is about 5,000 kilometers apart. At that distance, when one spacecraft spots something, none of the others can see it yet. It’s the responsibility of the one that spotted it to make an inference based on its observations and pass that intent on to the next spacecraft. If it’s an interesting observation, the next one can focus on the same spot when it flies over. But if it’s nothing, they can avoid that spot and focus on another one.
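The spacing figure can be checked with basic orbital geometry. The sketch below assumes a circular orbit, evenly spaced satellites, and a spherical Earth with a mean radius of 6,371 km; under those assumptions it gives the distance between neighbors and how long the next spacecraft takes to reach the same point in the orbit.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius
MU_EARTH = 398600.4418     # Earth's gravitational parameter, km^3/s^2


def neighbor_spacing_km(n_sats: int, altitude_km: float) -> tuple[float, float]:
    """(arc, chord) distance between adjacent satellites evenly spaced in one circular plane."""
    r = EARTH_RADIUS_KM + altitude_km
    angle = 2 * math.pi / n_sats           # angular separation between neighbors
    arc = r * angle                         # distance measured along the orbit
    chord = 2 * r * math.sin(angle / 2)     # straight-line distance through space
    return arc, chord


def neighbor_gap_s(n_sats: int, altitude_km: float) -> float:
    """Time until the next satellite reaches the same point in the orbit."""
    r = EARTH_RADIUS_KM + altitude_km
    period = 2 * math.pi * math.sqrt(r**3 / MU_EARTH)  # Kepler's third law
    return period / n_sats


if __name__ == "__main__":
    arc, chord = neighbor_spacing_km(8, 700)
    print(f"arc {arc:,.0f} km, chord {chord:,.0f} km, gap {neighbor_gap_s(8, 700) / 60:.1f} min")
```

For 8 spacecraft at 700 km this gives roughly 5,400 km in a straight line (about 5,550 km along the orbit) and a gap of roughly 12 minutes between neighbors, consistent with the 5,000-kilometer figure above. Because the Earth rotates underneath during those minutes, the next spacecraft’s ground track is shifted westward by roughly three degrees of longitude, which is one reason re-pointing the instrument matters.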

What are you doing with this type of research? Is it a type of global communications link or is it to be used for different science missions? For example, taking pictures of natural phenomena on Earth? Or is it too soon to know?

Adaptive control in spaceflight is a cool new technology. We want to show it’s possible to do these things with currently available hardware. Cubesat radios can function across huge distances and cubesat attitude control systems can re-orient the satellites from horizon-to-horizon in a few seconds. But at the same time, I don’t want it to be only a technology demonstration. I’m a scientist at heart. I like to look at questions that have not been answered because of technology roadblocks and figure out what could be a new observing strategy that could enable new measurements to answer those questions.

Problems in space science have varying spatial and temporal scales, and I try to understand how to automate adaptive control to capture data better. The event to be measured could be a transient storm that causes urban flooding. It could be the spread of a wildfire. Or it could be soil moisture change, which unfolds much more slowly than wildfires or floods. The nature of communication and control depends on how transient the event is and on its scale, as well as on onboard capacity and ground control availability. Also, when you have so many assets in space, it’s just not feasible to keep a human in the loop. The question then becomes: how can you use autonomy to command these spacecraft more efficiently, while at the same time adding value to science questions that could not have been answered without so many intelligent, coordinated assets?
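One way to picture how event transiency shapes the coordination loop is to compare an event’s timescale with how quickly each loop can respond. The sketch below is a hypothetical decision rule, assuming that a peer spacecraft can revisit a site faster than a ground-in-the-loop retasking cycle; the three response modes and the example timescales in the usage note are illustrative, not measured values.

```python
from enum import Enum


class Response(Enum):
    ONBOARD_ONLY = "the spotting spacecraft retasks itself"
    CROSSLINK_TO_PEER = "alert the next spacecraft directly"
    DEFER_TO_GROUND = "slow enough for ground planners to retask"


def choose_response(event_timescale_s: float, peer_revisit_s: float,
                    ground_cycle_s: float) -> Response:
    """Pick the slowest coordination loop that is still fast enough for the event."""
    if peer_revisit_s > ground_cycle_s:
        raise ValueError("sketch assumes peers revisit faster than the ground loop")
    if event_timescale_s >= ground_cycle_s:
        return Response.DEFER_TO_GROUND      # ground planners have time to act
    if event_timescale_s >= peer_revisit_s:
        return Response.CROSSLINK_TO_PEER    # too fast for ground, not for a peer
    return Response.ONBOARD_ONLY             # over before any peer arrives
```

With a hypothetical 12-minute peer revisit and a 6-hour ground cycle, a soil-moisture change evolving over weeks would be left to ground planners, a flood evolving over a couple of hours would trigger a cross-link, and a sub-minute event could only be caught by the spacecraft that saw it.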

The general idea is that you’re not only conducting a technology demonstration now, but also developing it for things we don’t even know exist yet. Like when mobile phones first came out, we had no idea apps would be as big as they are now. But these are measurable results you’re looking at, correct?

Right. That’s why we want to tie it to at least two or three case studies, so people will see the value in the technology. I think so much can open up that it is beyond our current imagination.

How would you like to see your area of research grow? You’re looking at some pretty large-scale global issues (monitoring forest fires, loss of agriculture, transportation), but also at relatable human problems.

I’m trying to look at transient or evolving phenomena that would benefit from autonomy, as opposed to problems that are localized in time and space. I am hoping to adapt our autonomy algorithms to diverse measurements: not just human problems, but also scientific ones that require measuring transient phenomena, like magnetospheric reconnection or plasma density changes.

What different projects are you working on now?

I have a couple of R&D projects on multi-spacecraft scheduling and adaptive control. I am a systems engineer on the Distributed Spacecraft Autonomy experiment that will fly onboard the Starling mission. The goal is to control a reconfigurable swarm with minimal ground supervision.

Aside from this, I work on a space traffic management project. There are perhaps 2,000 or more operational satellites. But if you count all the defunct satellites and debris, there are more than 20,000 objects. Judging by the news from the last year, there could be 20,000 new spacecraft in the next 5-10 years. These will be launched by companies looking to provide services, such as internet access in remote areas. We don’t have a framework in place to manage all this traffic in space. We’ve started developing APIs and building prototype software that will let spacecraft operators and service providers interact, and even negotiate, in a more automated way, to make space more efficient, usable, and safe for future generations.

Our STM (space traffic management) lab has a large Hyperwall with workstations representing some key components, and we’re setting up the software prototype to demo automated interactions in simulated scenarios. We want to build a marketplace for services, much as the automotive industry did 100 years ago, but this time for space, to lower the barrier to entry for new providers. If you want to set up your own dish and monitor space traffic, you can do that by connecting to the STM system. The project is less than a year old. It’s been an adventure ramping up, and we’re publishing articles to share the insights. (The project responds to Space Policy Directive-3, the National Space Traffic Management Policy: https://www.whitehouse.gov/presidential-actions/space-policy-directive-3-national-space-traffic-management-policy/)
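The prototype’s actual APIs are not described in this interview. Purely as an illustration of the marketplace idea, where a new provider (say, someone with their own dish) registers a service and operators discover it, here is a toy in-memory registry. Every name in it (`ServiceRegistry`, `Provider`, the service strings, the pricing field) is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Provider:
    """Hypothetical entry for a space traffic management service provider."""
    name: str
    services: frozenset[str]      # e.g. frozenset({"conjunction_assessment"})
    price_per_request: float      # hypothetical pricing field


@dataclass
class ServiceRegistry:
    """Toy in-memory directory: providers register, operators look services up."""
    providers: dict[str, Provider] = field(default_factory=dict)

    def register(self, provider: Provider) -> None:
        # Re-registering under the same name replaces the old entry.
        self.providers[provider.name] = provider

    def find(self, service: str) -> list[Provider]:
        """All providers offering `service`, cheapest first."""
        matches = [p for p in self.providers.values() if service in p.services]
        return sorted(matches, key=lambda p: p.price_per_request)


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register(Provider("backyard-dish", frozenset({"tracking"}), 5.0))
    registry.register(Provider("ssa-co", frozenset({"tracking", "conjunction_assessment"}), 3.0))
    print([p.name for p in registry.find("tracking")])
```

A real system would add authentication, agreed data formats, and the negotiation step between operators, none of which this toy attempts.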

Do you work a lot with Earth scientists?

I work primarily with Earth scientists, and a little with heliophysicists and planetary scientists.

That’s exciting. You’re a scientist at heart, but you’re an engineer as well. How did your background lead you to doing this type of work?

I did my master’s and PhD in aerospace and space systems engineering at MIT. My bachelor’s degree is in exploration geophysics, but my focus was more on applied physics. I like answering nature’s fundamental questions as well as building new things, so I looked for a way to combine the two in my career: building new things to answer those questions.

Have you had to overcome gender barriers? What advice would you give early career people who are looking to get into STEM but are not sure what path to take?

I went to an all-girls’ school, and then to an undergraduate institution with 5% women. I became aware of gender-based perceptions very late in life, but when I did, it hit quite intensely. While their nature depends a lot on culture (the U.S. vs. India, where I grew up), gender barriers and glass ceilings do exist. It’ll take generations to fix, but luckily, more resources are available for women now than ever before in history. I think it’s a great time to pick STEM as a career, or any career that was not traditionally held by women.

I’ve definitely benefited from having great mentors. My mother has been my strongest influence and pillar. Bosses I’ve worked with, colleagues, friends, even classmates have all been supportive, whether I reached out to them directly or not. Making yourself part of a diverse peer group helps. Being part of more than one project helps, because you can see how what you learn on one project applies to another, culturally and otherwise. Having open-minded scientists and thinkers around you helps as well.

Was there anything that surprised you?

We are in a generation where knowing how to code is as important as knowing any natural language. That’s not just true for astronomy or spacecraft engineering; it’s true for pretty much anything. We have access to so much data! To extract information from it all and provide actionable intelligence to so many machines, you just have to know how to code. I’d say that was a crucial realization for me more than 10 years ago.

What surprised me more recently at NASA was how sparse the interaction between engineers and scientists is, outside of specific missions. Because NASA is primarily science-driven, it’s important that this conversation happens more often than it does. It can be very siloed, and there are few avenues where knowledge exchange can actually happen. Often it is fairly qualitative; we call them cocktail-napkin-level discussions. Instead, I’d like to access people’s models and get actual numbers out of them. How can we make APIs to connect our models? Then I can tell you how my work can improve with yours, and vice versa.

Do you feel like a bridge between both of these worlds as you have experience in both? You’re able to speak the lingo?

So that’s something I never expected to be doing, but I love that it happened [laughs]. I thought to myself, okay, I’m going to be building spaceships and all these cool things, then I thought, “Wait a minute, what’s the science problem here?” And it led me to a whole new world of translating between the two [laughs].

Do you feel working at NASA Ames helps with that interdisciplinary approach?

People here are open about discussing their research and contributing to yours, so having different types of opinions helps. Over the course of my career at NASA, I’ve also watched a couple of very strong women build their own careers. That gives us all a chance to look up to them and think, “Well, I want to be like that!” So that helps.

If she can see it, she can be it.

Definitely. I think overall, telling yourself that… I want to be in a positive environment that keeps me pushing through the challenges. It’s an intellectually difficult path. There’s no instant gratification in science. You just have to slog yourself through years of work and maybe something will come out of it [laughs]. Even small successes are incredibly fulfilling and worth the effort, that’s what keeps me going.

Was there any particular moment when you thought, Hang on, I can do this as a career?

Back in my undergrad years, the Hubble team had just released the Ultra Deep Field images, and they were beautiful, visually stunning. But beyond the aesthetics, I realized that we were looking into a field full of stars and galaxies that had emitted their light at very different times. What we capture is light that reaches us at the same time, but that light probably left its sources billions of years apart. What we’re seeing on the screen is an illusion. For me, that idea was quite “deep” (pun intended). We’re able to look back in time, by varying amounts, through one image.

I was naturally interested in the technology that made this possible. I started looking at telescopes, then at the satellites that made such telescopes possible. So, I got interested in space through the Hubble Deep Field. The more I discovered about space, the more I wanted to know. And the better it got over time.